Download and use Office 2016 for FREE without a product key

Office 2016 is a version of the Microsoft Office productivity suite that succeeded Office 2013. New features of Office 2016 include the ability to manage and work with files in Microsoft OneDrive from the lock screen, a powerful search tool for support and commands called “Tell me”, and a co-authoring mode with users connected to Office Online.

Download original Office 2016 ISO

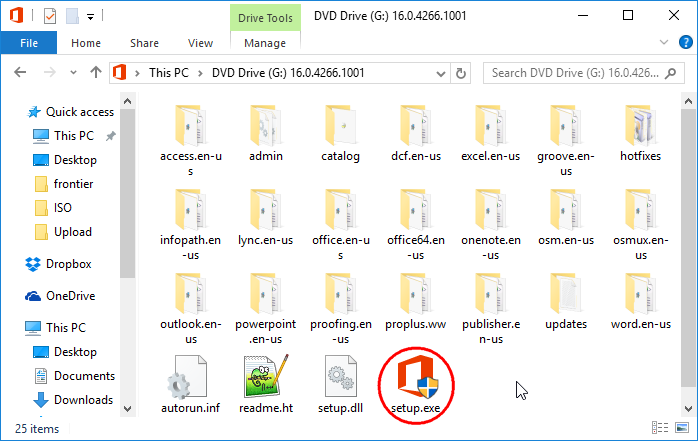

You can get the latest version of Microsoft Office here if you do not have it. Once your download is complete, you need to extract the original ISO image from the zip file. You will be left with a file named “SW_DVD5_Office_Professional_Plus_2016_W32_English…”. W32 in the filename refers to the 32-bit version; if you download the 64-bit version, the filename will contain 64Bit instead. Although an ISO file is normally burned to a CD/DVD or written to a USB flash drive, you can install Office 2016 without burning it by extracting the ISO with 7-Zip or a similar archive utility. Here is what you get after extracting the ISO file.

Installing Microsoft Office 2016

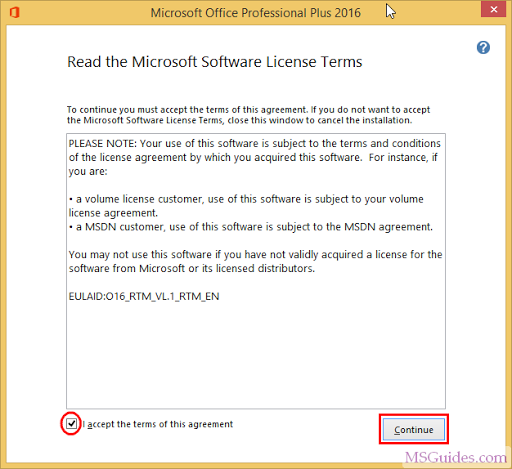

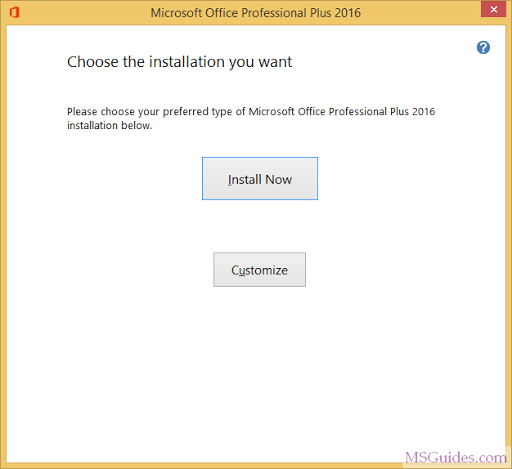

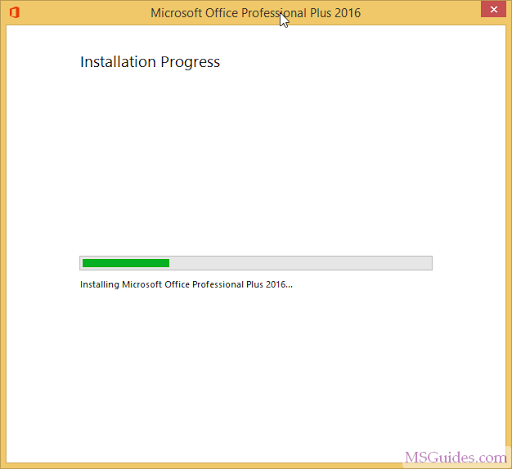

Simply run the setup file (named setup.exe) to install Office on your Windows PC.

Note: By default, Office will be installed in “C:\Program Files”.

Done!

Activate all versions of Office 2016 for FREE permanently

1. Using KMS client key to activate your Office manually

First, open a command prompt with admin rights, then follow the instructions below step by step. Just copy and paste the commands, and do not forget to hit Enter to execute them.

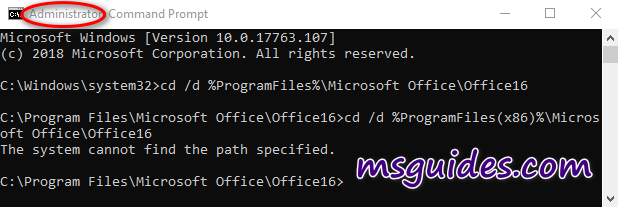

Step 1.1: Find the Office installation folder on your PC and change to it.

If Office is installed in the Program Files folder, the path will be “%ProgramFiles%\Microsoft Office\Office16” or “%ProgramFiles(x86)%\Microsoft Office\Office16”. Which one applies depends on the architecture of your Windows OS and of the Office build you installed. If you are not sure, don’t worry, just run both of the commands below. One of them will fail and print an error message on the screen.

cd /d %ProgramFiles%\Microsoft Office\Office16

cd /d %ProgramFiles(x86)%\Microsoft Office\Office16

Step 1.2: Convert your Office to the volume version if you are using the retail one.

If you got your Office from Microsoft, this step is required. If, on the other hand, you installed Office from a volume ISO file, this step is optional, so skip it if you want.

for /f %x in ('dir /b ..\root\Licenses16\proplusvl_kms*.xrm-ms') do cscript ospp.vbs /inslic:"..\root\Licenses16\%x"

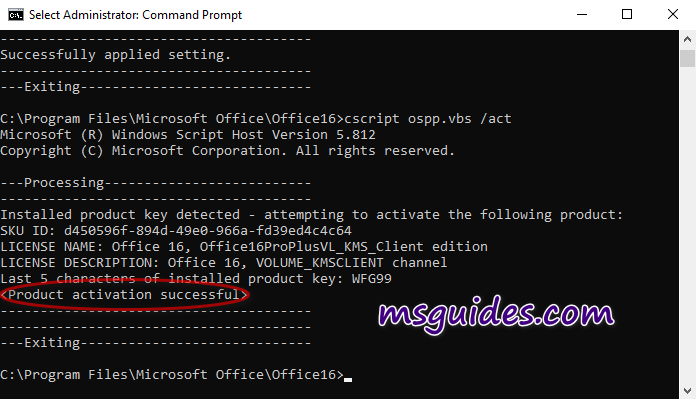

Step 1.3: Install Office client key and activate your Office.

Make sure your PC is connected to the internet, then run the following commands.

cscript ospp.vbs /inpkey:XQNVK-8JYDB-WJ9W3-YJ8YR-WFG99

cscript ospp.vbs /unpkey:BTDRB >nul

cscript ospp.vbs /unpkey:KHGM9 >nul

cscript ospp.vbs /unpkey:CPQVG >nul

cscript ospp.vbs /sethst:107.175.77.7

cscript ospp.vbs /setprt:1688

cscript ospp.vbs /act

If you see the error 0xC004F074, it means that your internet connection is unstable or the server is busy. Please make sure your device is online and run the “/act” command again until it succeeds.

Here is all the text you will get in the command prompt window.

C:\Windows\system32>cd /d %ProgramFiles%\Microsoft Office\Office16

C:\Program Files\Microsoft Office\Office16>cd /d %ProgramFiles(x86)%\Microsoft Office\Office16

The system cannot find the path specified.

C:\Program Files\Microsoft Office\Office16>for /f %x in ('dir /b ..\root\Licenses16\proplusvl_kms*.xrm-ms') do cscript ospp.vbs /inslic:"..\root\Licenses16\%x"

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /inslic:"..\root\Licenses16\ProPlusVL_KMS_Client-ppd.xrm-ms"

Microsoft (R) Windows Script Host Version 5.812

Copyright (C) Microsoft Corporation. All rights reserved.

---Processing--------------------------

Installing Office license: ..\root\licenses16\proplusvl_kms_client-ppd.xrm-ms

Office license installed successfully.

---Exiting-----------------------------

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /inslic:"..\root\Licenses16\ProPlusVL_KMS_Client-ul-oob.xrm-ms"

Microsoft (R) Windows Script Host Version 5.812

Copyright (C) Microsoft Corporation. All rights reserved.

---Processing--------------------------

Installing Office license: ..\root\licenses16\proplusvl_kms_client-ul-oob.xrm-ms

Office license installed successfully.

---Exiting-----------------------------

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /inslic:"..\root\Licenses16\ProPlusVL_KMS_Client-ul.xrm-ms"

Microsoft (R) Windows Script Host Version 5.812

Copyright (C) Microsoft Corporation. All rights reserved.

---Processing--------------------------

Installing Office license: ..\root\licenses16\proplusvl_kms_client-ul.xrm-ms

Office license installed successfully.

---Exiting-----------------------------

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /inpkey:XQNVK-8JYDB-WJ9W3-YJ8YR-WFG99

Microsoft (R) Windows Script Host Version 5.812

Copyright (C) Microsoft Corporation. All rights reserved.

---Processing--------------------------

---Exiting-----------------------------

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /unpkey:BTDRB >nul

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /unpkey:KHGM9 >nul

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /unpkey:CPQVG >nul

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /sethst:107.175.77.7

Microsoft (R) Windows Script Host Version 5.812

Copyright (C) Microsoft Corporation. All rights reserved.

---Processing--------------------------

Successfully applied setting.

---Exiting-----------------------------

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /setprt:1688

Microsoft (R) Windows Script Host Version 5.812

Copyright (C) Microsoft Corporation. All rights reserved.

---Processing--------------------------

Successfully applied setting.

---Exiting-----------------------------

C:\Program Files\Microsoft Office\Office16>cscript ospp.vbs /act

Microsoft (R) Windows Script Host Version 5.812

Copyright (C) Microsoft Corporation. All rights reserved.

---Processing--------------------------

Installed product key detected - attempting to activate the following product:

SKU ID: d450596f-894d-49e0-966a-fd39ed4c4c64

LICENSE NAME: Office 16, Office16ProPlusVL_KMS_Client edition

LICENSE DESCRIPTION: Office 16, VOLUME_KMSCLIENT channel

Last 5 characters of installed product key: WFG99

---Exiting-----------------------------

C:\Program Files\Microsoft Office\Office16>

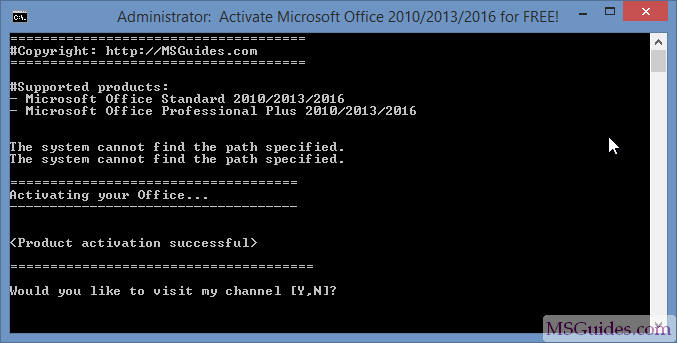

2. Using prewritten batch script

This method is no longer recommended because of a recent Microsoft update.

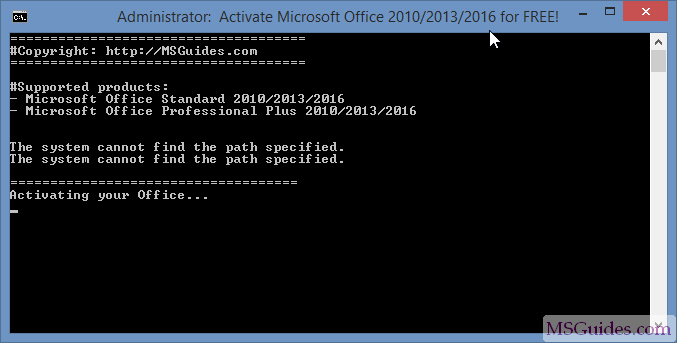

Supported products:

- Microsoft Office Standard 2016

- Microsoft Office Professional Plus 2016

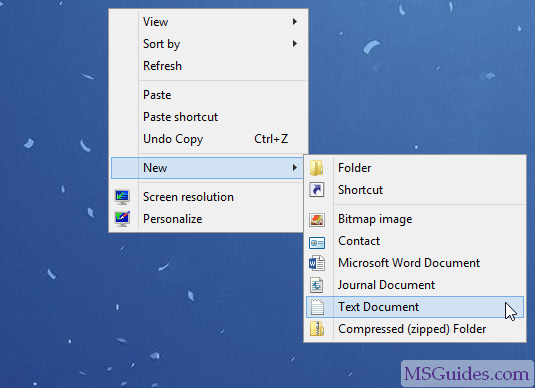

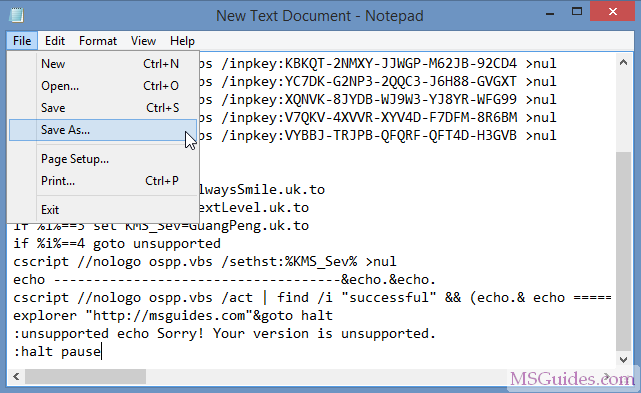

Step 2.1: Copy the code below into a new text document.

@echo off

title Activate Microsoft Office 2016 (ALL versions) for FREE - MSGuides.com&cls&echo =====================================================================================&echo #Project: Activating Microsoft software products for FREE without additional software&echo =====================================================================================&echo.&echo #Supported products:&echo - Microsoft Office Standard 2016&echo - Microsoft Office Professional Plus 2016&echo.&echo.&(if exist "%ProgramFiles%\Microsoft Office\Office16\ospp.vbs" cd /d "%ProgramFiles%\Microsoft Office\Office16")&(if exist "%ProgramFiles(x86)%\Microsoft Office\Office16\ospp.vbs" cd /d "%ProgramFiles(x86)%\Microsoft Office\Office16")&(for /f %%x in ('dir /b ..\root\Licenses16\proplusvl_kms*.xrm-ms') do cscript ospp.vbs /inslic:"..\root\Licenses16\%%x" >nul)&(for /f %%x in ('dir /b ..\root\Licenses16\proplusvl_mak*.xrm-ms') do cscript ospp.vbs /inslic:"..\root\Licenses16\%%x" >nul)&echo.&echo ============================================================================&echo Activating your Office...&cscript //nologo ospp.vbs /setprt:1688 >nul&cscript //nologo ospp.vbs /unpkey:WFG99 >nul&cscript //nologo ospp.vbs /unpkey:DRTFM >nul&cscript //nologo ospp.vbs /unpkey:BTDRB >nul&cscript //nologo ospp.vbs /unpkey:CPQVG >nul&set i=1&cscript //nologo ospp.vbs /inpkey:XQNVK-8JYDB-WJ9W3-YJ8YR-WFG99 >nul||goto notsupported

:skms

if %i% GTR 10 goto busy

if %i% EQU 1 set KMS=kms7.MSGuides.com

if %i% EQU 2 set KMS=107.175.77.7

if %i% GTR 2 goto ato

cscript //nologo ospp.vbs /sethst:%KMS% >nul

:ato

echo ============================================================================&echo.&echo.&cscript //nologo ospp.vbs /act | find /i "successful" && (echo.&echo ============================================================================&echo.&echo #My official blog: MSGuides.com&echo.&echo #How it works: bit.ly/kms-server&echo.&echo #Please feel free to contact me at [email protected] if you have any questions or concerns.&echo.&echo #Please consider supporting this project: donate.msguides.com&echo #Your support is helping me keep my servers running 24/7!&echo.&echo ============================================================================&choice /n /c YN /m "Would you like to visit my blog [Y,N]?" & if errorlevel 2 exit) || (echo The connection to my KMS server failed! Trying to connect to another one... & echo Please wait... & echo. & echo. & set /a i+=1 & goto skms)

explorer "http://MSGuides.com"&goto halt

:notsupported

echo ============================================================================&echo.&echo Sorry, your version is not supported.&echo.&goto halt

:busy

echo ============================================================================&echo.&echo Sorry, the server is busy and can't respond to your request. Please try again.&echo.

:halt

pause >nul

Create a new text document.

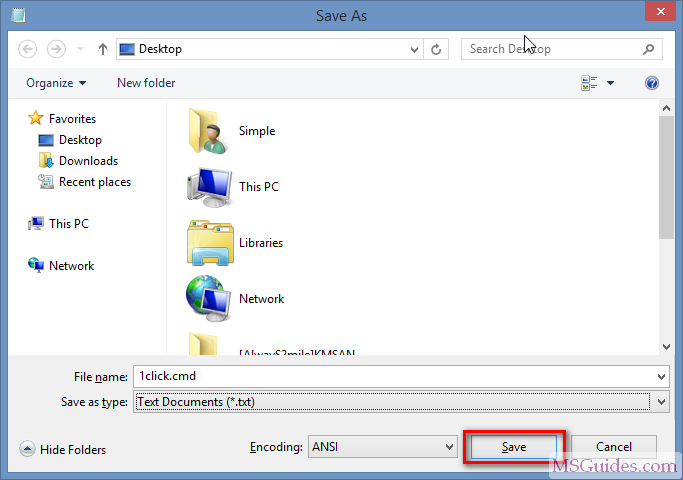

Step 2.2: Paste the code into the text file. Then save it as a batch file (named “1click.cmd”).

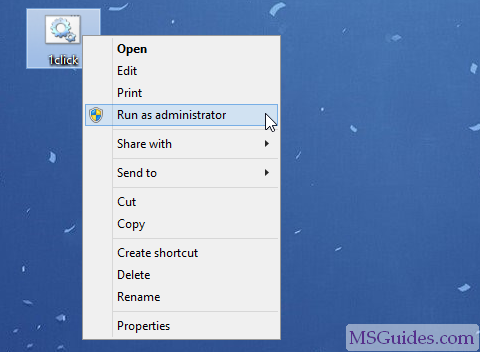

Step 2.3: Run the batch file as administrator.

Please wait…

Done!

Check the activation status again.

If you have any questions or concerns, please leave a comment. I would be glad to explain in more detail. Thank you so much for all your feedback and support!

Thank you, it worked.

The Software Licensing Service reported that the action requires administrator privilege.

this is what im getting

After a week or two of usage a message is now popping up that the product is not genuine. Anoying as h**l.

OSPP.VBS not found showing how to solve it ?

Hello, I did this today and it didn't work. Is there another update?

Defender on windows 11 sees this as a trojan file. Trojan: 32/leonem. Even if I save with another name the story remains the same.

same here

Thank you so much! I was reluctant at first but your instructions are straightforward and easy. You are an angel! G*d bless you and the works you are doing.

Good afternoon.

I have already completed the process, but it does not let me activate.

Is there any other option?

Thank you for the information. I will be waiting for your reply.

Everything went fine until I opened Word. Once in Word, it asks me to start a paid plan. It is as if nothing had happened.

It is no longer working for my account; it says there is an error. They identified that it was a c*****r and I can no longer use it.

Perfect second step is 100% working

Thanks so much for the help

thank you so so much

Input Error: Can not find script file “C:\Program Files (x86)\Microsoft Office\Office16\ospp.vbs”

getting this error.

if this happens just make sure that the Microsft office is installed in the windows not just the word excel notes power point are install cause in other case its not preinstalled so you need to manually installed it

Do your need Internet for it to work? Because mine ain’t showing on the command prompt at all.

thanks working in 2024.

my antvirus detected trojan leonem

same for me..

just disable the real-time protection before creating the script and enable it after you successfully activated the msoffice

it is so good program

It didn't work here. It says my version is not supported. Is there anything I can do?

Hi, I downloaded the file and installed office correctly, but when activating the command, the ospp.vbs file can’t be found, I’ve tried everything, reinstalled it and nothing like this file can be found, can anyone help me please?

Worked fine, Thanks!

I run with error: “The connection to my KMS server failed! Trying to connect to another one…”. What shoud I do?

thanks

thanks alot sir